The cost of testing in production: How 10 industries stopped learning expensive lessons

You've seen it happen. A consultant recommends a major process change, leadership approves the budget, the team spends months implementing it, and then reality hits. The solution doesn't work the way everyone thought it would. By the time anyone realizes the mistake, the cost is already baked in: in dollars, in time, in team morale.

Here's the uncomfortable truth: most business decisions get tested in production. Companies build the new distribution center, redesign the workflow, restructure the supply chain, and hope it works out. When it doesn't, they call it a learning experience and absorb the cost.

Digital twins flip this dynamic. Instead of learning expensive lessons in the real world, you run the experiments virtually first. It's not about having flashy technology for its own sake. It's about answering a simple question before you commit resources: what happens if we're wrong?

Think of it like this. A farmer doesn't plant an entire field with a new crop variety without testing it first. They run a small plot, watch how it performs under real conditions, adjust their approach, then scale what works. Digital twins bring that same practical wisdom to business operations across industries. You're creating a virtual twin of your actual system, whether that's a factory floor, a hospital network, or a retail supply chain, so you can test changes, spot problems, and refine your approach before anything touches the real world. Let's look at how ten different industries are using this approach to move from reactive problem-solving to predictive intelligence.

How the real world is actually using this

Let's talk about what's actually happening in the field, starting with the places where the cost of being wrong is measured in millions.

Manufacturing: When downtime means money walking out the door

Manufacturing plants generate enormous amounts of data. Sensors track vibration, temperature, pressure, and wear patterns. Most of that information just accumulates somewhere, unused. The machines keep running until they don't, and then maintenance scrambles to fix whatever broke.

Digital twins change the question from "when will this break?" to "what's about to fail, and when should we fix it?" You feed all that sensor data into a virtual model that mirrors your actual equipment. The model learns to recognize patterns. It notices when a motor's vibration signature changes in ways that have historically preceded failure. Instead of scheduling maintenance by the calendar or waiting for a catastrophic breakdown, you intervene when the data says it's time.
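A minimal sketch of that pattern-recognition step, assuming vibration arrives as a plain list of RMS readings (for example in mm/s) and that "a change in the signature" is approximated by a reading more than three standard deviations away from a trailing baseline. The function name, window size, and threshold are illustrative assumptions; production systems typically use richer features and models trained on historical failures.

```python
from statistics import mean, stdev


def flag_vibration_anomalies(readings, window=20, threshold=3.0):
    """Return indices of readings that deviate from the trailing baseline.

    A reading is flagged when its z-score against the previous `window`
    readings exceeds `threshold` (both values are illustrative defaults).
    """
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all counts as a deviation.
            if readings[i] != mu:
                flagged.append(i)
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Synthetic example: a healthy motor hovering around 1.0 mm/s, then a jump.
healthy = [1.0 + 0.02 * ((i * 7) % 5 - 2) for i in range(40)]
print(flag_vibration_anomalies(healthy + [1.4]))  # -> [40]
```

A rolling z-score is about the simplest stand-in for the learned models the paragraph describes; its appeal in a pilot is that it needs no labeled failure history.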

Automotive plants using this approach have reported cutting maintenance costs by 30% while increasing equipment uptime by 40%. That's not incremental improvement; it's a fundamental shift in how production operates. The predictive maintenance market is projected to grow by $33.72 billion between 2024 and 2029, a sign of how compelling the ROI has proven.

BMW has virtualized all 31 of its production sites using this technology. Fifteen thousand employees can now collaborate on factory layouts in real time, regardless of where they're located, and the company has cut production planning time by nearly a third. When a U.S. assembly plant created a digital twin in January 2024, it used the model to redesign production schedules and identify bottlenecks. Monthly cost savings reached 5% to 7%, simply from reducing overtime and catching inefficiencies such as excessive material travel and unpredictable process timing.

The practical question for consultants isn't whether this works. It's how quickly you can deploy it for your clients. Low-code platforms have compressed implementation timelines from years to three to six months. You're not building everything from scratch. You're connecting existing data sources, configuring the model, and delivering operational value fast enough that clients see ROI within the same quarter they go live.

But here's where it gets interesting. Manufacturing plants don't just have sensor data. They have decades of institutional knowledge: the unwritten expertise of veteran operators who know exactly why machine #7 acts strangely on humid days, or which production sequence causes the bottleneck everyone complains about. That knowledge walks out the door when people retire. Knowledge graphs address this by structuring that expertise into something the digital twin can actually use. Instead of one engineer remembering the quirk, the system remembers it, applies it, and keeps improving as new patterns emerge.
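One way to picture "the system remembers it" is a tiny triple store, sketched here under the assumption that tacit rules can be written as (subject, predicate, object) facts. The asset names, predicates, and the `advise` helper are hypothetical; a real deployment would more likely use a graph database and an agreed vocabulary.

```python
from collections import defaultdict


class KnowledgeGraph:
    """Minimal in-memory store of (subject, predicate, object) triples."""

    def __init__(self):
        self._edges = defaultdict(set)

    def add(self, subject, predicate, obj):
        self._edges[subject].add((predicate, obj))

    def objects(self, subject, predicate):
        """All objects linked to `subject` by `predicate`, sorted for stable output."""
        return sorted(o for p, o in self._edges.get(subject, ()) if p == predicate)


def advise(kg, machine, active_conditions):
    """Walk machine -> sensitive_to -> causes -> mitigated_by for current conditions."""
    advice = []
    for condition in kg.objects(machine, "sensitive_to"):
        if condition not in active_conditions:
            continue
        for effect in kg.objects(condition, "causes"):
            for action in kg.objects(effect, "mitigated_by"):
                advice.append((condition, effect, action))
    return advice


# Hypothetical facts captured from a veteran operator's know-how.
kg = KnowledgeGraph()
kg.add("machine_7", "sensitive_to", "high_humidity")
kg.add("high_humidity", "causes", "spindle_drift")
kg.add("spindle_drift", "mitigated_by", "recalibrate_before_shift")

print(advise(kg, "machine_7", {"high_humidity"}))
```

Keeping the machine-specific fact ("machine_7 is sensitive to humidity") separate from the general one ("humidity causes spindle drift") is what lets the same remedy apply to any other machine later tagged as humidity-sensitive.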

Healthcare: The industry drowning in its own data

Hospitals produce 50 petabytes of data every year. Ninety-seven percent of it goes unused. Think about that for a moment. The information is there. The potential insights are there. But the systems aren't set up to turn data into decisions that improve patient outcomes.

Digital twins in healthcare create virtual replicas of patients, organs, and entire hospital systems. In January 2024, researchers at Johns Hopkins University used MRI and PET scans to build digital twins of patients' hearts. Surgeons could simulate catheter ablation procedures for arrhythmia treatment before ever touching the patient. Dr. Manesh Patel, Chief of the Duke Division of Cardiology, put it simply: "They are patient-specific and let doctors compare interventions before surgery."

This isn't theoretical. During COVID-19, Oregon used GE Healthcare's Care Command center to track bed and ventilator availability in real time. Resources went where they were needed most because the virtual model showed exactly what was available and where.

Drug development is another area seeing transformation. Traditional discovery takes a decade and $2.6 billion, with a 96% failure rate. Digital twins act as synthetic patients in clinical trials, simulating control groups to forecast drug efficacy before physical trials even begin. That's time and money saved by testing virtually first.

The U.S. healthcare system still wrestles with fragmented data and demographic biases in predictive models. Federated learning is emerging as a solution, allowing hospitals to train models collaboratively without sharing raw patient data. Programs like the NIH's "All of Us" are addressing demographic gaps by collecting data from at least 1 million individuals from diverse backgrounds. The key is ensuring digital twin outputs are reliable, especially in safety-critical applications. Verification, Validation, and Uncertainty Quantification processes are becoming standard practice to build trust in these systems.
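Federated averaging (FedAvg) is one common form of federated learning. The sketch below is a toy version, assuming each hospital holds private (x, y) pairs for a one-variable linear model: every site runs a few gradient-descent steps locally, and only the resulting parameters, never the raw records, go to a coordinator that averages them weighted by sample count. The data, learning rate, and round counts are invented for illustration.

```python
def local_update(w, b, data, lr, epochs):
    """A few epochs of full-batch gradient descent on one site's private data (MSE loss)."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b


def federated_averaging(sites, rounds=1000, lr=0.01, epochs=5):
    """FedAvg: sites train locally; the coordinator only sees and averages parameters."""
    w, b = 0.0, 0.0
    total = sum(len(data) for data in sites)
    for _ in range(rounds):
        updates = [(local_update(w, b, data, lr, epochs), len(data)) for data in sites]
        # Weight each site's parameters by how many samples it trained on.
        w = sum(site_w * n for (site_w, _), n in updates) / total
        b = sum(site_b * n for (_, site_b), n in updates) / total
    return w, b


# Synthetic "hospitals", each holding a different slice of y = 2x + 1.
site_a = [(x, 2 * x + 1) for x in (0.0, 1.0, 2.0, 3.0)]
site_b = [(x, 2 * x + 1) for x in (4.0, 5.0, 6.0)]
site_c = [(x, 2 * x + 1) for x in (1.5, 2.5)]

w, b = federated_averaging([site_a, site_b, site_c])
print(f"w = {w:.3f}, b = {b:.3f}")
```

Because the synthetic sites all follow y = 2x + 1 exactly, the averaged model should land very close to w = 2, b = 1, even though no site ever shared its records.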

Energy: Managing complexity that never sleeps

The U.S. electric grid was built for one-way electricity flow. Now it has to handle EVs charging unpredictably, solar panels feeding power back into the system, and extreme weather events that weren't part of the original design parameters. Grid operators are making rapid decisions to protect infrastructure that serves millions of people.

Digital twins replicate power plants, transmission lines, and distribution networks. When a hurricane is forecast, operators overlay storm surge models on the digital twin to identify vulnerable substations and position repair crews before the storm hits. Other utilities use the same approach: Thames Water uses a digital twin of its Deptford water supply network to save approximately 264,000 gallons of water daily. GE Vernova's Digital Wind Farms have increased turbine energy production by up to 20%, adding an estimated $100 million in value over the turbines' lifespans.

For nuclear power, the ANSI/ANS-3.5 standard governs the fidelity of simulators used for operator training. Plant operators use these virtual environments to rehearse rare but high-stakes failure scenarios, so when an unusual event does happen, they've already practiced the response.

The practical challenge is aging infrastructure struggling to meet modern demands. Digital twins help utilities prioritize asset management, basing repair-versus-replace decisions on real-time condition data rather than fixed maintenance schedules. Climate change adds another layer of complexity, with unusual weather patterns stressing systems that were never designed for such conditions. EV adoption and distributed solar have created unpredictable consumption patterns that traditional forecasting struggles to accommodate. Digital twins enable integrated distribution planning, combining forecasted energy loads, EV adoption rates, and technical constraints into a single operational view.

Smart Cities: When your infrastructure is older than your data

Cincinnati has bridges that have stood for over 150 years. The city inspects them with drones equipped with OptoAI™ real-time analysis. Inspections that used to take months now take minutes. The system identifies cracks and rust, automatically generates work orders, and enables ongoing monitoring to prevent failures before they happen.

The Orlando Economic Partnership created a 3D digital twin covering 800 square miles across three counties. Built with the Unity gaming engine, it's used for planning rail line expansions and improving hurricane recovery efforts. NASA Langley Research Center in Hampton, Virginia, manages its 764-acre campus with a digital twin covering over 300 buildings. They've implemented nearly 50 applications for space management, flood prevention, and maintenance bidding. Organizations using digital twins report average cost savings of 19% and a 15% reduction in carbon emissions.

Municipal infrastructure generates massive amounts of data stored in disconnected systems. Process flow diagrams, SCADA systems, lab records, and sensor networks all operate in silos. Knowledge graphs consolidate this data, resolve naming and formatting inconsistencies, and streamline digital twin validation. That shared structure also makes it easier to transfer a platform from one municipal plant to another.
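Resolving naming inconsistencies is often the first, least glamorous step. This sketch assumes labels differ only in case, separators, leading zeros, and a small table of abbreviations; `canonical_id`, `ALIASES`, and the sample records are hypothetical. Production entity resolution usually adds fuzzy matching and human review, but the output has the same shape: one node per physical asset, with attributes from every source attached.

```python
import re
from collections import defaultdict

# Hypothetical abbreviation table; real sites would maintain this with operators.
ALIASES = {"p": "pump", "blr": "boiler"}


def canonical_id(raw_name):
    """Normalize an asset label: lowercase, split letters from digits,
    expand known abbreviations, and strip leading zeros."""
    tokens = re.findall(r"[a-z]+|\d+", raw_name.lower())
    parts = [str(int(t)) if t.isdigit() else ALIASES.get(t, t) for t in tokens]
    return "_".join(parts)


def merge_sources(sources):
    """Attach each system's attributes to one node per canonical asset ID."""
    nodes = defaultdict(dict)
    for system, records in sources.items():
        for raw_name, attributes in records:
            nodes[canonical_id(raw_name)][system] = attributes
    return dict(nodes)


# The same pump, labeled three different ways in three siloed systems.
sources = {
    "scada": [("PUMP-101", {"flow_lps": 42.0})],
    "lab": [("Pump 0101", {"turbidity_ntu": 0.3})],
    "pfd": [("P-101", {"rated_kw": 15})],
}
print(merge_sources(sources))
```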

U.S. municipalities face fragmented data systems and outdated governance processes that slow automated decision-making. Many cities lack in-house expertise to develop and manage digital twins. Regional collaborations are emerging as a practical solution, pooling resources and expertise to integrate digital twins into planning processes.

Supply Chain: When two-day delivery meets reality

Over 90% of American consumers expect deliveries within two to three days, and one-third demand same-day service. Meanwhile, warehousing wages rose by more than 30% between July 2020 and July 2024. Traditional supply chain software relies on fixed rules and isolated local optimizations, which leaves it poorly equipped to handle real-time disruptions.

Digital twins create virtual replicas of supply networks. They simulate scenarios using live data such as traffic conditions, weather forecasts, and delivery schedules to determine the most efficient routes. For predictive maintenance, virtual models of trucks and containers monitor asset health, anticipate failures, and minimize downtime.
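Routing on live data can be sketched as a shortest-path search whose edge weights are base travel times scaled by a live congestion or weather factor. The example uses Dijkstra's algorithm on a directed road graph; the node names, times, and the `live_multiplier` input are illustrative assumptions rather than any particular platform's API.

```python
import heapq


def fastest_route(base_minutes, live_multiplier, start, goal):
    """Dijkstra over a directed road graph.

    Edge cost = base travel time x live factor (e.g. 1.8 for heavy traffic);
    edges without live data keep their base time.
    """
    graph = {}
    for (u, v), minutes in base_minutes.items():
        graph.setdefault(u, []).append((v, minutes * live_multiplier.get((u, v), 1.0)))

    best = {start: 0.0}
    previous = {}
    heap = [(0.0, start)]
    done = set()
    while heap:
        cost, node = heapq.heappop(heap)
        if node in done:
            continue  # stale heap entry
        done.add(node)
        if node == goal:
            break
        for neighbor, edge_cost in graph.get(node, []):
            candidate = cost + edge_cost
            if candidate < best.get(neighbor, float("inf")):
                best[neighbor] = candidate
                previous[neighbor] = node
                heapq.heappush(heap, (candidate, neighbor))

    if goal not in best:
        return None, float("inf")
    path = [goal]
    while path[-1] != start:
        path.append(previous[path[-1]])
    return path[::-1], best[goal]


base = {("DC", "A"): 30, ("A", "Store"): 20, ("DC", "B"): 25, ("B", "Store"): 30}
print(fastest_route(base, {}, "DC", "Store"))                     # normal conditions
print(fastest_route(base, {("A", "Store"): 1.8}, "DC", "Store"))  # congestion on A -> Store
```

Under normal conditions the route via A wins (50 minutes); once live data shows congestion on the A leg, the same search switches to the route via B (55 minutes).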

One global retailer used a digital twin to evaluate distribution center locations. They resized a cross-dock facility, cutting real estate footprint by 50% without sacrificing functionality. U.S. operators leverage digital twins to track carrier performance and adjust policies in real time, reducing transportation costs by roughly 5%. Early adopters report up to 30% improvement in forecast accuracy and reductions in delays and downtime ranging from 50% to 80%.

Low-code platforms enable integration with existing systems such as SAP or cloud platforms without requiring a full infrastructure overhaul. These platforms automate data transfers, allow incremental implementation, and often deliver measurable results within three months. Operational automation can handle up to 85% of planning activities, cutting tasks that used to take days down to minutes.

Supply chain data is scattered across warehouse systems, transportation platforms, IoT devices, and legacy databases. Knowledge graphs integrate this fragmented information, providing a comprehensive view of performance across the entire value chain. This enables a transition from heuristic-based management to detailed, dynamic optimization, and supports the development of "self-healing" supply chains in which predictive AI suggests adjustments to inventory placement and transportation strategies.

The practical approach: focus on high-impact use cases like inventory positioning and route optimization. Start small, target quick wins such as improving warehouse throughput or refining delivery routes, and build momentum before scaling to full implementation. Create a pipeline of digitized, standardized data, even if it initially relies on static inputs. Train teams to interpret digital twin outputs and adjust workflows to act on real-time insights.

Automotive: Digital before physical

The automotive industry is moving from metal-first to digital-first design. Virtual "digital originals" let engineers refine aerodynamics and assembly workflows before producing a single part. Digital twins cut development timelines by 20-50% and reduce quality issues by 25% once vehicles enter production. Adoption across U.S. manufacturing could generate an estimated $37.9 billion in value.

A U.S. assembly plant that adopted a factory digital twin in January 2024 used it to redesign production schedules and identify bottlenecks. By integrating with existing Manufacturing Execution Systems and IoT devices, the plant achieved monthly cost savings of 5% to 7% from reducing overtime and uncovering inefficiencies.
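Bottleneck identification can be illustrated with a deliberately simple steady-state capacity model of a serial line: each station's hourly capacity is 60 divided by its cycle time in minutes, times its number of parallel machines, and the slowest station caps the whole line. The station names and times are hypothetical, and the model ignores buffers, blocking, and variability, which a factory digital twin would typically capture with discrete-event simulation.

```python
def line_throughput(stations):
    """Steady-state capacity of a serial line.

    stations maps name -> (cycle_time_minutes, parallel_machines).
    Returns (units_per_hour, bottleneck_station).
    """
    capacity = {
        name: 60.0 * machines / cycle_time
        for name, (cycle_time, machines) in stations.items()
    }
    bottleneck = min(capacity, key=capacity.get)
    return capacity[bottleneck], bottleneck


# Hypothetical line: welding, painting, final assembly.
current = {"weld": (2.0, 1), "paint": (3.0, 1), "assembly": (2.5, 1)}
print(line_throughput(current))  # -> (20.0, 'paint')

# What-if: add a second paint booth before committing capital to it.
proposal = dict(current, paint=(3.0, 2))
print(line_throughput(proposal))  # -> (24.0, 'assembly')
```

The what-if is the point: doubling paint capacity raises line output only to 24 units per hour, because assembly becomes the new constraint.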

Subscription-based software models make digital twin technology accessible to mid-sized manufacturers. Low-code platforms allow shop floor operators to interact with digital twin data without advanced programming skills. A unified namespace serves as a single source of truth across design, production, and maintenance systems. Starting with a proof-of-concept sandbox environment lets companies test feasibility, refine impact estimates, and address challenges before integrating with live operations.

Knowledge graphs capture the unspoken expertise of veteran engineers and line workers, transforming decades of shop floor know-how into actionable data. This is especially useful for modeling complex interactions between software and hardware in modern vehicles. Manufacturers can simulate the impact of over-the-air software updates on vehicle performance before deployment. Chrysler's Sterling Heights Assembly Plant created a virtual experience of its 5-million-square-foot facility, enhancing both consumer engagement and operational transparency.

U.S. manufacturers face integration challenges with legacy systems. Many plants rely on decades-old infrastructure with fragmented data pipelines. Azita Martin, VP and General Manager of Retail and CPG at Nvidia, explains: "Optimizing layouts in the physical world is very capital-intensive and disruptive, but with a physics-based omniverse simulation platform, you can simulate different layouts and see how objects and people behave in them, and more accurately estimate what the throughput would be."

Focus on high-impact use cases such as optimizing production schedules and implementing predictive maintenance for critical assets. Start with targeted pilots that let you test and refine while building technical expertise and organizational readiness. Workforce training is crucial: operators need to understand how to interpret digital twin insights and adjust workflows accordingly.

Aerospace: Where mistakes cost more than money

The aerospace industry uses digital twins to accelerate development and reduce prototyping expenses. Virtual replicas, from individual turbine blades to entire aircraft systems, make it possible to test thousands of configurations without costly physical prototypes. This reduces total product development time by 20% to 50% and lowers quality issues by 25% when products reach production. Virtual testing cuts the number of preproduction prototypes from two or three down to just one.

Airbus's Skywise platform connects more than 12,000 aircraft to virtual models, enabling 50,000 users to monitor fleet health in real time and anticipate component wear before failures occur. RTX (formerly Raytheon Technologies) applied digital twin technology to the Pratt & Whitney F119 engine used in the F-22 Raptor, fine-tuning performance and balancing output with service life.

Platforms like AWS IoT TwinMaker simplify digital twin creation with prebuilt components and automation, cutting model development time by up to 50%. These tools integrate diverse data sources, such as IoT sensors, cameras, and monitoring systems, into a unified virtual environment without requiring extensive custom coding.

Karen Willcox, Director of the Oden Institute for Computational Engineering and Sciences, explains: "Digital twins bring value to mechanical and aerospace systems by speeding up development, reducing risk, predicting issues and reducing sustainment costs."

In aerospace, where a single design change can have cascading effects over months or years, knowledge graphs help manage complexity. By integrating disconnected predictive models into a cohesive framework, they support decision-making that balances competing priorities across the supply chain.

One challenge is outdated IT systems, some of which have been in place for decades, and many engineers still rely on paper blueprints. A phased approach works best: competitive scoping, then architecture design, then disciplined software development. Establishing a dedicated center of excellence ensures consistent processes across business units. Remember, a digital twin doesn't need to be perfect: it only needs to meet specific capability requirements and deliver a clear cost-benefit advantage.

Construction: Testing before building

Construction and real estate leverage digital twins to plan and execute projects virtually before physical work begins. This tackles fragmented workflows and disconnected stakeholders that historically cause delays and budget overruns. Two-way data exchange between physical assets and project participants provides a unified perspective. Investments in data tracking and analytics, including digital twins, are projected to generate $88.6 billion in benefits.

In April 2024, BMO (Bank of Montreal) used Matterport's 3D capture technology to create digital twins for 500 newly acquired Bank of the West branches. Virtual simulation of layouts and rebranding efforts cut over 6,000 hours of manual survey work and saved hundreds of thousands of dollars in travel expenses.

A global retailer used a detailed digital twin of its distribution network to test a new cross-dock design. The simulation revealed the facility could be downsized and relocated, reducing real estate needs by 50% while enhancing regional distribution center efficiency by 10%. A steel manufacturer created a digital twin of its supply chain covering 50 production assets and over 300 warehouses. By simulating risks up to 12 weeks in advance, the company achieved a 2-percentage-point improvement in EBITDA and reduced inventory levels by 15%.

Low-code platforms enable organizations to build digital twins without extensive data science expertise. These platforms support agile, iterative approaches, allowing teams to develop proof of concepts within two to three months. Companies report an average of 19% cost savings and 22% return on investment.

Digital twins rely on well-structured semantics, often described as "spatial computing," to connect diverse data sources: real-time IoT inputs, business systems such as ERP, reality-capture tools like lidar, and 3D CAD models. Joshan Abraham, Associate Partner at McKinsey, highlights: "Digital twins will soon become key tools for optimizing processes and decision making in every industry."

Despite their potential, digital twins face implementation challenges, including high upfront costs, a lack of technical expertise, and a fragmented industry structure. A structured three-step approach helps: create a blueprint to align stakeholders, develop a base digital twin (typically three to six months), then enhance its capabilities with AI and advanced modeling. Focus on quick wins, such as optimizing inventory positioning or supporting daily decisions, to demonstrate value early.

Retail: When customer expectations outpace operations

Ninety percent of U.S. shoppers expect delivery within two to three days, and a third want same-day service. Warehousing wages surged over 30% between July 2020 and July 2024. Digital twins allow retailers to simulate everything from inventory flows to store layouts, enabling smarter decisions before physical changes.

Walmart rolled out digital twin systems across 4,200 locations, reducing emergency maintenance alerts by 30% and cutting refrigeration costs by 19%. These systems integrate data from point-of-sale, inventory management, and CRM software into a single dashboard.

AI-driven digital twins enhance forecasting by combining internal sales data with external factors such as shipping volumes, port activity, and industry trends, raising planning accuracy by 20% to 30%. Retailers dynamically adjust safety stock levels based on local and seasonal demand. Some companies report a 200% productivity boost and a 95% reduction in administrative tasks for store staff.
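Dynamic safety stock can be illustrated with the textbook formula SS = z * sigma_d * sqrt(L), where z comes from the target service level, sigma_d is the standard deviation of daily demand, and L is the lead time in days. This sketch assumes independent, roughly normal daily demand and a fixed lead time; the demand histories and service level are invented. Recomputing it per store and per season from recent data is one simple way to make safety stock "dynamic."

```python
from math import sqrt
from statistics import NormalDist, stdev


def safety_stock(daily_demand, lead_time_days, service_level=0.95):
    """Textbook safety stock: z * sigma_d * sqrt(L).

    Assumes independent, roughly normal daily demand and a fixed lead time.
    """
    z = NormalDist().inv_cdf(service_level)  # about 1.645 for a 95% service level
    return z * stdev(daily_demand) * sqrt(lead_time_days)


# Invented daily unit sales for one SKU at one store.
off_season = [10, 12, 8, 11, 9]
peak_season = [18, 30, 12, 26, 14]

print(round(safety_stock(off_season, lead_time_days=4), 1))   # -> 5.2
print(round(safety_stock(peak_season, lead_time_days=4), 1))  # -> 25.5
```

The same SKU needs roughly five times the buffer in peak season, not because average demand doubled but because day-to-day volatility rose; that is the signal a digital twin feeding live demand data can surface.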

Low-code platforms hosted in the cloud make adoption easier without large specialized data science teams. These platforms handle IoT integration and analytics while shifting costs from heavy upfront investments to flexible operational expenses. Retailers quickly test changes like new store layouts or customer flow optimizations without significant resource commitments.

Digital twins strengthen supply chain tools by organizing and integrating data for predictive analytics. A unified data architecture such as a unified namespace simplifies scaling across multiple locations. Leading brands like ASOS, IKEA, and Adidas use digital twins for virtual fitting rooms, helping reduce return rates.

To get started, retailers should improve data visibility by identifying the key inputs and outputs for specific use cases. Use agile, iterative approaches to develop standardized data products. Start with smaller, high-impact projects like optimizing inventory placement or improving daily operational decisions. One estimate puts the global digital twin market at between $125 billion and $150 billion by 2032, growing 30% to 40% a year.

Professional Services: Your expertise as infrastructure

For consulting firms, the most critical asset is expertise that lives in people's heads and often leaves when a client project ends. Digital twins offer virtual environments where firms can test strategies, simulate business models, and refine that knowledge itself. Instead of relying on intuition or static spreadsheets, firms build dynamic 3D models to predict how decisions in infrastructure, business development, and strategy will unfold before committing resources.

In 2024, a procurement team used a digital twin platform to automate 85% of planning processes. What once took days was completed in minutes, with overall cycle planning times slashed by 50%. Ninety-six percent of business leaders acknowledge the value of digital twins, with 62% describing benefits as "immense." Companies deploying these tools report an average of 19% cost savings and 18% increase in revenue.

Modular, low-code platforms enable consulting firms to build and deliver digital twins in as little as three months. These platforms can integrate with existing IT systems such as SAP or cloud environments, eliminating the need to overhaul legacy infrastructure. This modular approach allows customization for specific challenges, such as procurement optimization, supply chain modeling, or factory simulation, while scaling capabilities as client needs evolve.

Despite their potential, digital twins come with implementation obstacles, including high initial costs, talent shortages, and difficulty integrating with legacy systems. A phased approach mitigates these challenges. Start with a proof of concept that delivers quick, measurable results before advancing to complex, live-data simulations. An early focus on data visibility is crucial: identify the inputs and outputs you need, then build a roadmap for data products that address multiple high-impact use cases.

Where this goes from here

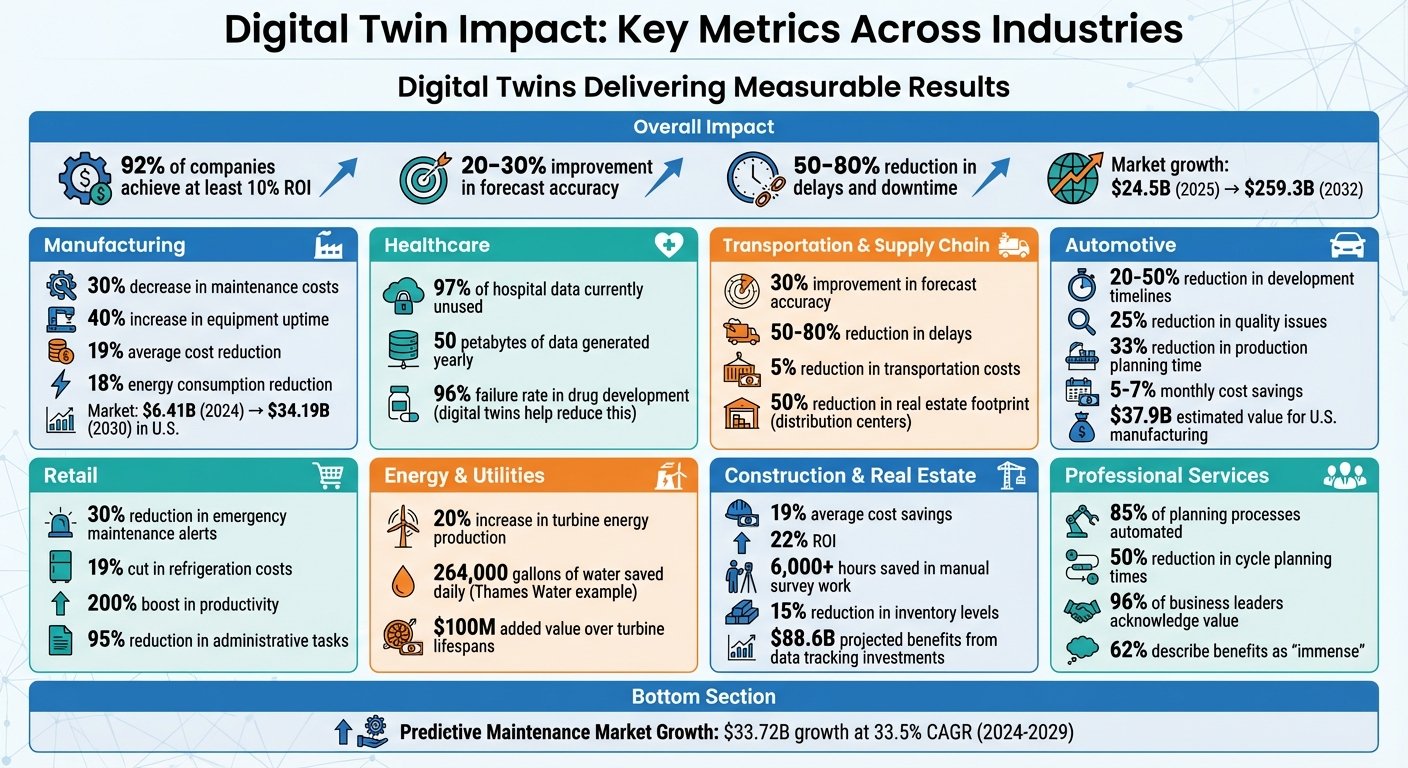

Digital twins are delivering tangible results. Ninety-two percent of companies using them report achieving at least 10% ROI. Early adopters see 20-30% improved forecast accuracy, 50-80% fewer delays, and 5-7% monthly cost savings in production.

Market projections vary, but one estimate has the market expanding from $24.5 billion in 2025 to $259.3 billion by 2032. McKinsey puts it plainly: "Digital twins are set to evolve from a nice-to-have technology into a must-have tool for manufacturers of all kinds - and may eventually be required to interact in a fully virtualized supply chain."

But here's what matters more than market projections: the fundamental shift from testing decisions in production to testing them virtually first. That's not a technology trend. That's a change in how risk gets managed.

For consultants, this creates a clear opportunity. Your clients are already sitting on the data. They're already making decisions that carry hidden costs when those decisions turn out wrong. The question isn't whether digital twins work - the industries above have answered that. The question is how quickly you can help your clients move from reactive to predictive, from expensive lessons to virtual testing.

The practical path forward starts with identifying one high-value problem where the cost of being wrong is measurable. A production bottleneck. A supply chain vulnerability. An asset that fails unpredictably. Then build a focused pilot that demonstrates ROI within a single quarter. Use that momentum to scale incrementally, without massive upfront investment or wholesale system replacement.

Knowledge graphs organize the scattered data your clients already have. Low-code platforms compress implementation timelines from years to months. The technology is ready. The question is whether you're positioned to help your clients use it.

If you're looking at digital twins for a specific client challenge and want to talk through the practical implementation (what data you actually need, which platform makes sense for your use case, how to structure a pilot that delivers measurable results in 90 days), that's the conversation we're built for.

Reach out to discuss your specific scenario. No theoretical frameworks, no vendor pitch. Just a direct conversation about whether this approach fits your client's problem and how to execute it if it does.

Common questions about digital twin implementation

How do digital twins help manufacturers save on maintenance costs?

Digital twins help manufacturers move from reactive repairs to predictive maintenance. These systems gather real-time data from machinery, tracking temperature, vibration, and wear to monitor equipment health. When unusual patterns emerge, the system forecasts potential failures ahead of time, so repairs can happen during planned downtime instead of disrupting operations with unexpected breakdowns. This proactive approach cuts overtime labor costs, reduces the need to stockpile spare parts, and extends machinery lifespan by avoiding severe damage. Digital twins also let manufacturers run "what-if" simulations, testing scenarios to refine processes or redesign equipment for easier maintenance.

How do knowledge graphs enhance the functionality of digital twins?

Knowledge graphs strengthen digital twins by streamlining data integration, modeling complex context, and enabling advanced analytics. They structure the relationships between data points, so information from diverse sources can be connected and analyzed in real time. Incorporated into a digital twin, a knowledge graph supports more precise simulations, predictive insights, and better decisions. This is especially valuable in manufacturing, healthcare, and energy, where understanding how system components interact is essential to improving performance.

How do digital twins enhance supply chain efficiency in retail?

Digital twins give retailers a real-time virtual model of their supply chain, with visibility into every step from suppliers and warehouses to store shelves. By integrating live data from sensors, transactions, and market trends, these tools help businesses anticipate and address disruptions before they happen. This proactive approach helps minimize stockouts, reduce lead times, and position inventory where it's needed. Combined with AI-powered forecasting, early adopters report up to 30% better demand forecast accuracy and 50-80% fewer delays. That precision supports smarter replenishment schedules, better safety stock management, and lower carrying costs while maintaining strong service levels. Digital twins also let retailers test "what-if" scenarios, such as the effects of promotions, seasonal demand shifts, or transportation bottlenecks, providing valuable insights for a more agile and cost-efficient supply chain.